Cinc as a highly available cluster

Introduction

For most use cases a single instance of CINC will cover all your needs. It is a tool after all, and not a service that processes client traffic.

If a single instance of anything is unacceptable to you, or if CINC plays a crucial role in your infrastructure, you can run CINC as a highly available cluster.

In this article we will go through setting this up on VMs in your private cloud or with a cloud provider. We will need 6 to 10 VMs, depending on whether you run your own balancers. I will be setting it up on Rocky Linux 8.

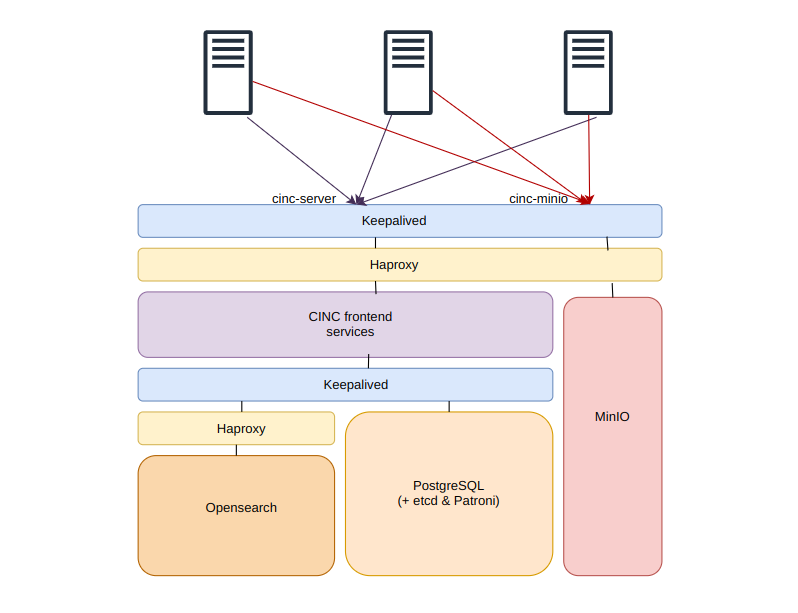

Architecture

In order to achieve a highly available setup we’ll need to separate all services with persistent data to dedicated clusters.

We will move OpenSearch to its own cluster.

PostgreSQL will also be an external cluster, with additional installations of etcd and Patroni for cluster management and failover.

The Bookshelf service, which stores Cinc cookbooks, will be replaced with another object storage service, MinIO.

If your infrastructure is in AWS, you can use an S3 bucket for this purpose instead. In this article we will be using MinIO.

Every cinc-client needs to be able to connect to your Cinc server to authenticate and to upload data at the end of the run, and to your object storage service (MinIO/S3) to download cookbooks.

The cluster service schema looks something like this.

Frontend servers

On the 2 frontend servers we will use the cinc-server-ctl command to install the services that hold no persistent data.

Services: nginx, oc_bifrost, oc_id, opscode-erchef, redis_lb

frontend-1

frontend-2

Backend servers

Backend servers will host services with persistent data, each in clustered setup. We will install all of them on 4 servers, so each service cluster will have 4 members.

Services: opensearch, postgresql(+ etcd, patroni), minio

backend-1

backend-2

backend-3

backend-4

Balancers

If you are not running this in a public cloud you can use keepalived and haproxy.

If you are in a public cloud, you can replace them with cloud-native balancers, which saves 4 servers. If you do use keepalived and haproxy, the first order of business is to deploy them and configure a virtual IP on the keepalived servers that points to the haproxy servers.

To keep the article focused I won’t go into the details of haproxy and keepalived setup; I will only cover what is relevant for balancing each service. Check the references for details on balancers.

keepalived-1

keepalived-2

haproxy-1

haproxy-2

Certificates

In this setup we will make sure all communication inside the cluster of each service is encrypted.

Each server will have a fully qualified domain name. If you don’t have a DNS server, you can add servers to /etc/hosts file.
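If you go the /etc/hosts route, a small helper keeps the entries identical on every server. This is only a sketch: the backend IPs 10.0.0.1 to 10.0.0.4 match the examples used later in this article, while the frontend IPs 10.0.0.11 and 10.0.0.12 and the function name are placeholders I made up for illustration.

```shell
# Print /etc/hosts entries for the cluster members.
# Backend IPs match the examples in this article; frontend IPs are assumed.
cluster_hosts() {
  local i
  for i in 1 2 3 4; do
    printf '10.0.0.%d backend-%d.example.com backend-%d\n' "$i" "$i" "$i"
  done
  for i in 1 2; do
    printf '10.0.0.1%d frontend-%d.example.com frontend-%d\n' "$i" "$i" "$i"
  done
}

# Append only the entries that are not already present (run as root):
#   cluster_hosts | while read -r line; do
#     grep -qxF "$line" /etc/hosts || echo "$line" >> /etc/hosts
#   done
```

Because the loop only appends missing lines, it can be run repeatedly on the same server without creating duplicates.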

Use openssl to generate your certificates from a single self-signed root CA; you can then use the same certificates for all your service clusters.

Make sure you add this root certificate to your servers’ trust store so that the certificates are trusted.

If you don’t want to add this root certificate to all of your servers in infrastructure, you can use these self-signed certificates only for inside-cluster communication.

TLS termination for traffic from cinc-clients can happen on haproxy service, with publicly trusted certificates hosted there.
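The certificate steps above could look roughly like the following. Treat it as a sketch under assumptions: the function names, file names, key sizes and validity periods are my own choices, the CA common name is made up, and the subject fields simply mirror the DNs used in the OpenSearch configuration later in this article. It also relies on the default openssl.cnf marking the self-signed certificate as a CA.

```shell
# Subject prefix mirroring the DNs used in opensearch.yml (nodes_dn).
DN_BASE="/C=US/ST=MyState/L=MyCity/O=MyCompany/OU=MyOrganizationalUnit"

# Create a self-signed root CA (root-ca.key, root-ca.pem) in directory $1.
make_root_ca() {
  local dir="$1"
  openssl req -x509 -newkey rsa:4096 -sha256 -nodes -days 3650 \
    -keyout "$dir/root-ca.key" -out "$dir/root-ca.pem" \
    -subj "$DN_BASE/CN=Example Root CA"
}

# Create a key, a CA-signed certificate and a full chain for FQDN $2 in $1.
make_server_cert() {
  local dir="$1" fqdn="$2"
  openssl req -new -newkey rsa:2048 -nodes \
    -keyout "$dir/$fqdn.key" -out "$dir/$fqdn.csr" \
    -subj "$DN_BASE/CN=$fqdn" &&
  # SAN is required by modern TLS clients; both usages allow node-to-node TLS.
  printf 'subjectAltName=DNS:%s\nextendedKeyUsage=serverAuth,clientAuth\n' \
    "$fqdn" > "$dir/$fqdn.ext" &&
  openssl x509 -req -in "$dir/$fqdn.csr" -sha256 -days 825 \
    -CA "$dir/root-ca.pem" -CAkey "$dir/root-ca.key" -CAcreateserial \
    -extfile "$dir/$fqdn.ext" -out "$dir/$fqdn.crt" &&
  cat "$dir/$fqdn.crt" "$dir/root-ca.pem" > "$dir/$fqdn-full-chain.crt"
}

# Usage:
#   mkdir -p certs && make_root_ca certs
#   for n in 1 2 3 4; do make_server_cert certs "backend-$n.example.com"; done
```

On Rocky Linux the root certificate can then be trusted system-wide by copying root-ca.pem to /etc/pki/ca-trust/source/anchors/ and running update-ca-trust.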

Opensearch

Installation

We will start with the backend services, the ones that hold persistent data.

Add the OpenSearch repo. Cinc 15.9.38 supports OpenSearch 1.x. Before proceeding, check which version is supported by the Cinc release you will be using. In this setup we will be using 1.3.18.

curl -SL https://artifacts.opensearch.org/releases/bundle/opensearch/1.x/opensearch-1.x.repo -o /etc/yum.repos.d/opensearch-1.x.repo && dnf install opensearch -y

Once installed, create a separate /data partition so that data cannot fill up the root partition. We will use /data for all backend services. Then set up the /data/opensearch directory and adjust ownership:

mkdir /data/opensearch && chown opensearch:opensearch /data/opensearch

Configuration

Once the service is installed, we need to set up certificates. Here I will be using the ones we generated for the server FQDNs. You need 3 separate files: the full certificate chain, the private key and the root CA certificate.

Additionally, you can generate one more certificate for admin user authentication; it is referenced by the admin_dn part of the configuration.

Put them in /etc/opensearch/ and make sure the opensearch user has permissions to read them.

Use this configuration as a template for configuring your opensearch cluster. Configure all 4 servers.

cluster.name: cinc-opensearch

node.name: backend-1.example.com

path.data: /data/opensearch

path.logs: /var/log/opensearch

network.host: 0.0.0.0

discovery.seed_hosts: [ "backend-1.example.com", "backend-2.example.com", "backend-3.example.com", "backend-4.example.com" ]

cluster.initial_master_nodes: [ "backend-1.example.com", "backend-2.example.com", "backend-3.example.com", "backend-4.example.com" ]

node.master: true

plugins.security.allow_default_init_securityindex: true

plugins.security.ssl.transport.pemcert_filepath: full-chain.crt

plugins.security.ssl.transport.pemkey_filepath: private.key

plugins.security.ssl.transport.pemtrustedcas_filepath: root-ca.pem

plugins.security.ssl.transport.enabled_protocols:

- "TLSv1.2"

plugins.security.ssl.transport.enforce_hostname_verification: false

plugins.security.restapi.roles_enabled: ["all_access", "security_rest_api_access"]

plugins.security.system_indices.enabled: true

plugins.security.system_indices.indices: [".opendistro-alerting-config", ".opendistro-alerting-alert*", ".opendistro-anomaly-results*", ".opendistro-anomaly-detector*", ".opendistro-anomaly-checkpoints", ".opendistro-anomaly-detection-state", ".opendistro-reports-*", ".opendistro-notifications-*", ".opendistro-notebooks", ".opendistro-asynchronous-search-response*", ".replication-metadata-store"]

plugins.security.authcz.admin_dn:

- "CN=A,OU=MyOrganizationalUnit,O=MyCompany,L=MyCity,ST=MyState,C=US"

plugins.security.ssl.http.enabled: true

plugins.security.ssl.http.pemcert_filepath: full-chain.crt

plugins.security.ssl.http.pemkey_filepath: private.key

plugins.security.ssl.http.pemtrustedcas_filepath: root-ca.pem

plugins.security.ssl.http.enabled_protocols:

- "TLSv1.2"

plugins.security.nodes_dn:

- "CN=backend-1.example.com,OU=MyOrganizationalUnit,O=MyCompany,L=MyCity,ST=MyState,C=US"

- "CN=backend-2.example.com,OU=MyOrganizationalUnit,O=MyCompany,L=MyCity,ST=MyState,C=US"

- "CN=backend-3.example.com,OU=MyOrganizationalUnit,O=MyCompany,L=MyCity,ST=MyState,C=US"

- "CN=backend-4.example.com,OU=MyOrganizationalUnit,O=MyCompany,L=MyCity,ST=MyState,C=US"

http.max_content_length: 1024mb

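Then, so that the admin password is not left at the default (admin), export the initial password environment variable, then start and enable the service. Note that this variable is read by the security demo installer in OpenSearch 2.12 and later, so on a 1.x install confirm that the password actually changed: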

export OPENSEARCH_INITIAL_ADMIN_PASSWORD=newpassword && systemctl start opensearch && systemctl enable opensearch

I will be dedicating this OpenSearch cluster to Cinc only. If you share the cluster among multiple services, best practice is to create multiple users with granular roles.

Let’s check the health of the cluster to make sure we have no issues.

curl -XGET -u $user:$pass -H "Content-Type: application/json" 'https://backend-1.example.com:9200/_cluster/health?pretty'
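If you script the rollout, it helps to wait until the cluster actually reports green before moving on. Below is a minimal sketch of that idea; the function names, the retry count and the 2 second pause are my own assumptions, and it expects the root CA to be in the trust store (otherwise add --cacert to the curl call).

```shell
# Extract the "status" value (green/yellow/red) from cluster-health JSON on stdin.
cluster_status() {
  grep -o '"status" *: *"[a-z]*"' | sed 's/.*"\([a-z]*\)"$/\1/'
}

# Poll until the cluster reports green, or give up after $3 attempts (default 30).
# $1 = base URL, $2 = user:password
wait_for_green() {
  local url="$1" auth="$2" tries="${3:-30}" status=""
  while [ "$tries" -gt 0 ]; do
    status=$(curl -s -u "$auth" "$url/_cluster/health" | cluster_status)
    [ "$status" = "green" ] && return 0
    tries=$((tries - 1))
    sleep 2
  done
  echo "cluster status: ${status:-unreachable}" >&2
  return 1
}

# Usage: wait_for_green https://backend-1.example.com:9200 "$user:$pass"
```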

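Additionally, if you have more than 10 000 nodes, you will need to raise max_result_window. More on this shortly. The chef index is created by cinc-server-ctl reconfigure later in this article, so run this command after that step.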

curl -XPUT -u $user:$pass -H "Content-Type: application/json" https://backend-1.example.com:9200/chef/_settings -d '{ "index" : { "max_result_window" : 100000 } }'

Balancing

For balancing we will use L4 balancing on keepalived, forwarded to L7 balancing on haproxy.

Here we will also work around an OpenSearch limit that shows up if you have more than 10 000 servers in your infrastructure.

Knife search will return only 10 000 results.

To speed up searches, OpenSearch caps results at 10 000 by default.

If you want more results, you need to send track_total_hits=true with each request and raise max_result_window as we did in the previous step.

Until this is fixed permanently, you can work around it by rewriting the search path on the balancer.

In all of our balancing configs we will also use Layer 7 checks to make sure each node is ready to receive traffic.

frontend cinc-opensearch-cluster

mode http

bind 10.0.0.100:9200 ssl crt /etc/ssl/haproxy

# Workaround of Opensearch limit of 10k search

acl is_search path_reg ^/chef/_search$

http-request set-path /chef/_search?track_total_hits=true if is_search

default_backend cinc-opensearch

backend cinc-opensearch

balance roundrobin

option httpchk GET /_plugins/_security/health

http-check expect status 200

server backend-1 backend-1.example.com:9200 check-ssl check ssl verify none

server backend-2 backend-2.example.com:9200 check-ssl check ssl verify none

server backend-3 backend-3.example.com:9200 check-ssl check ssl verify none

server backend-4 backend-4.example.com:9200 check-ssl check ssl verify none

PostgreSQL

Installation

For cluster management of PostgreSQL we will be using etcd and patroni.

Always check the Chef release notes to see which version of PostgreSQL is supported.

First, download the etcd and etcdctl binaries and place them in /usr/local/bin/. Then give them proper permissions and create the etcd user plus the data and config directories.

chown root:root /usr/local/bin/etcd* &&\

chmod 755 /usr/local/bin/etcd* &&\

mkdir -p /var/lib/etcd &&\

mkdir -p /etc/etcd &&\

groupadd --system etcd &&\

useradd -s /sbin/nologin --system -g etcd etcd &&\

chown -R etcd:etcd /var/lib/etcd /etc/etcd &&\

chmod 700 /var/lib/etcd
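The download itself is not shown above, so here is one way it could be done before running those commands. This is a sketch under assumptions: the version v3.5.15 is only an example I picked, not a recommendation from this setup, and the function names are hypothetical. The URL pattern is the one used by the etcd GitHub releases.

```shell
# ETCD_VER is an assumption; use the release you have validated.
ETCD_VER="${ETCD_VER:-v3.5.15}"

# Build the GitHub release URL for a given etcd version.
etcd_tarball_url() {
  echo "https://github.com/etcd-io/etcd/releases/download/$1/etcd-$1-linux-amd64.tar.gz"
}

# Download the tarball and copy etcd and etcdctl to /usr/local/bin (run as root).
install_etcd_binaries() {
  local ver="$1" tmp rc
  tmp=$(mktemp -d) || return 1
  curl -fsSL "$(etcd_tarball_url "$ver")" -o "$tmp/etcd.tar.gz" &&
    tar -xzf "$tmp/etcd.tar.gz" -C "$tmp" --strip-components=1 &&
    install -m 0755 "$tmp/etcd" "$tmp/etcdctl" /usr/local/bin/
  rc=$?
  rm -rf "$tmp"
  return "$rc"
}

# Usage: install_etcd_binaries "$ETCD_VER"
```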

Then you will need to download the repo and run the installation.

dnf install -y https://download.postgresql.org/pub/repos/yum/reporpms/EL-8-x86_64/pgdg-redhat-repo-latest.noarch.rpm &&\

dnf -qy module disable postgresql &&\

dnf install -y postgresql13-server-13.14 patroni patroni-etcd

Configuration

Once installed, create /data/postgresql dir and adjust ownership:

mkdir /data/postgresql && chown postgres:postgres /data/postgresql && chmod 700 /data/postgresql

Next, we will configure the systemd unit file /etc/systemd/system/etcd.service for etcd. Edit the values to reflect your setup for each server in the cluster. Here is an example:

[Unit]

Description=etcd

Documentation=https://github.com/etcd-io/etcd

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=notify

User=etcd

ExecStart=/usr/local/bin/etcd \

--name backend-1.example.com \

--data-dir=/var/lib/etcd \

--initial-advertise-peer-urls https://10.0.0.1:2380 \

--listen-peer-urls https://10.0.0.1:2380 \

--listen-client-urls https://10.0.0.1:2379,http://127.0.0.1:2379 \

--advertise-client-urls https://10.0.0.1:2379 \

--initial-cluster-token etcd-cluster-1 \

--initial-cluster backend-1.example.com=https://backend-1.example.com:2380,backend-2.example.com=https://backend-2.example.com:2380,backend-3.example.com=https://backend-3.example.com:2380,backend-4.example.com=https://backend-4.example.com:2380 \

--initial-cluster-state new --auto-tls --peer-auto-tls

[Install]

WantedBy=multi-user.target

Then configure Patroni, which also holds the PostgreSQL settings. Use the following configuration as a template.

scope: cinc_pgsql_cluster

namespace: /service/

name: backend-1

restapi:

  listen: 10.0.0.1:8008

  connect_address: 10.0.0.1:8008

  allowlist_include_members: true

  certfile: /etc/ssl/server-cert.pem

  keyfile: /etc/ssl/private.key

  cafile: /etc/ssl/root-ca.pem

ctl:

  insecure: true

etcd3:

  hosts: 10.0.0.1:2379,10.0.0.2:2379,10.0.0.3:2379,10.0.0.4:2379

  protocol: https

bootstrap:

  dcs:

    ttl: 30

    loop_wait: 10

    retry_timeout: 10

    maximum_lag_on_failover: 1048576

    postgresql:

      use_pg_rewind: true

      use_slots: true

      parameters:

        max_connections: 400

  initdb:

    - encoding: UTF8

    - data-checksums

  pg_hba:

    - host replication replicator 127.0.0.1/32 md5

    - host replication replicator 10.0.0.1/32 md5

    - host replication replicator 10.0.0.2/32 md5

    - host replication replicator 10.0.0.3/32 md5

    - host replication replicator 10.0.0.4/32 md5

    - host all all 0.0.0.0/0 md5

  users:

    admin:

      password: $pass

      options:

        - createrole

        - createdb

postgresql:

  listen: 0.0.0.0:5432

  connect_address: 10.0.0.1:5432

  data_dir: /data/postgresql/

  bin_dir: /usr/pgsql-13/bin

  pgpass: /tmp/pgpass

  authentication:

    replication:

      username: replicator

      password: "$pass"

    superuser:

      username: postgres

      password: "$pass"

  parameters:

    max_connections: 400

tags:

  nofailover: false

  noloadbalance: false

  clonefrom: false

  nosync: false

That is it. Make sure to enable etcd and patroni to start on boot. Patroni will manage PostgreSQL, starting and stopping it. Run this on all nodes.

systemctl start etcd && systemctl start patroni && systemctl enable etcd && systemctl enable patroni

Check that data is properly placed and that a leader has been elected:

# patronictl -c /etc/patroni/patroni.yml list

+ Cluster: cinc_pgsql_cluster (7416721032775114149) -+----+-----------+

| Member         | Host        | Role    | State     | TL | Lag in MB |

+----------------+-------------+---------+-----------+----+-----------+

| backend-1      | 10.0.0.1    | Leader  | running   |  4 |           |

| backend-2      | 10.0.0.2    | Replica | streaming |  4 |         0 |

| backend-3      | 10.0.0.3    | Replica | streaming |  4 |         0 |

| backend-4      | 10.0.0.4    | Replica | streaming |  4 |         0 |

+----------------+-------------+---------+-----------+----+-----------+
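For scripted checks, the leader can be pulled out of that table with a little awk. The helper names below are hypothetical, and the parsing assumes the column layout shown above, with the member name in the first column and the role in the third.

```shell
# Print the member name(s) whose Role column is "Leader".
# Reads `patronictl list` table output on stdin.
patroni_leader() {
  awk -F'|' '$4 ~ /Leader/ { gsub(/ /, "", $2); print $2 }'
}

# Succeed only if exactly one leader is listed.
has_single_leader() {
  [ "$(patroni_leader | wc -l | tr -d ' ')" = "1" ]
}

# Usage:
#   patronictl -c /etc/patroni/patroni.yml list | patroni_leader
# etcd membership can be checked through the local plaintext listener
# defined in the unit file above:
#   etcdctl --endpoints=http://127.0.0.1:2379 member list -w table
```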

Balancing

For PostgreSQL we will use L4 balancing on keepalived with L7 checks that find the leader and send r/w requests to it. As mentioned before, you could use a cloud-native network balancer as well.

###########################

# cinc-postgresql-cluster #

###########################

virtual_server 10.0.0.100 5432 {

delay_loop 2

lb_algo rr

lb_kind DR

protocol TCP

real_server 10.0.0.1 5432 {

weight 1

SSL_GET {

connect_timeout 2

connect_port 8008

url {

path /read-write

status_code 200

}

}

}

real_server 10.0.0.2 5432 {

weight 1

SSL_GET {

connect_timeout 2

connect_port 8008

url {

path /read-write

status_code 200

}

}

}

real_server 10.0.0.3 5432 {

weight 1

SSL_GET {

connect_timeout 2

connect_port 8008

url {

path /read-write

status_code 200

}

}

}

real_server 10.0.0.4 5432 {

weight 1

SSL_GET {

connect_timeout 2

connect_port 8008

url {

path /read-write

status_code 200

}

}

}

}
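To see what keepalived sees, you can run the same probe by hand. The sketch below asks the Patroni REST API on port 8008 for /read-write on each node and reports the one that answers 200; the function names are my own, and -k is used only because the check above does not verify certificates either.

```shell
# Ask the Patroni REST API on one node whether it accepts read-write traffic.
# Prints the HTTP status code; -k skips certificate verification.
probe_code() {
  curl -sk -o /dev/null -w '%{http_code}' --max-time 2 "https://$1:8008/read-write"
}

# Print the first node that answers 200, i.e. the current leader.
find_leader() {
  local ip
  for ip in "$@"; do
    if [ "$(probe_code "$ip")" = "200" ]; then
      echo "$ip"
      return 0
    fi
  done
  echo "no leader found" >&2
  return 1
}

# Usage: find_leader 10.0.0.1 10.0.0.2 10.0.0.3 10.0.0.4
```

Replicas answer this endpoint with a non-200 code, which is why only the leader stays in the keepalived pool.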

MinIO

MinIO replaces the Bookshelf service as the object storage. You could also use an S3 bucket for this; we will choose MinIO as an on-premise solution. Bookshelf stores cookbooks, and unless you have a shared disk between the frontend servers, that data has to live in one place so that all frontends give consistent responses.

Installation

First we will need to install the service on all servers and create a user and disk. Consult official docs.

wget https://dl.min.io/server/minio/release/linux-amd64/archive/minio-20240913202602.0.0-1.x86_64.rpm -O minio.rpm &&\

dnf install minio.rpm -y &&\

groupadd -r minio-user &&\

useradd -M -r -g minio-user minio-user &&\

mkdir -p /data/minio/dir{1..4} &&\

chown -R minio-user:minio-user /data/minio

Configuration

MinIO in a clustered setup requires each server to have 4 disks. You could configure them using LVM or simply use 4 directories. I’ve chosen the second option, as cookbook data is also stored in git and is not critical.

As a consequence of using directories, the MinIO client (mc) might not show proper disk usage.

The configuration is done in /etc/default/minio through environment variables and should look something like this:

MINIO_VOLUMES="https://backend-{1...4}.example.com:9900/data/minio/dir{1...4}"

MINIO_OPTS="--console-address :9001 --address 0.0.0.0:9900"

MINIO_ROOT_USER=minioadmin

MINIO_ROOT_PASSWORD=$pass

MINIO_PROMETHEUS_AUTH_TYPE=public

Here we are using a dedicated MinIO cluster, so we can use the admin user. If you have more buckets for other services, you will need more granular role access per bucket.

Then let’s configure certificates. We will be using the same self-signed ones. By default MinIO uses the path /home/minio-user/.minio/certs/CAs, but this can be overridden with environment variables. We’ll use the default, so let’s create the directory:

mkdir -p /home/minio-user/.minio/certs/CAs &&\

chown -R minio-user:minio-user /home/minio-user/

Your file structure should look something like this and minio-user should own all these files:

# ll /home/minio-user/.minio/certs/

CAs

private.key

public.crt

# ll /home/minio-user/.minio/certs/CAs

backend-1.crt

backend-2.crt

backend-3.crt

backend-4.crt

root-ca.crt

As noted above, I use the admin user in MinIO because this cluster serves only CINC. Make sure cert file ownership is correct.

Start and enable the service:

systemctl start minio && systemctl enable minio

You will need to create a bucket at the very end, once the entire setup is complete and you have created the organization. You can configure mc (the MinIO client) to do this, or log in to your server URL on port 9001 (https) in the browser and add it there.

The bucket name you need to create is organization-$org-id, for example organization-928a25c3cce4bc623572df8a7764c185.

The ID is the guid under which the organization was created in Cinc, so look it up with this command after you create your organization:

# knife org show my-org

full_name: My Organization

guid: 928a25c3cce4bc623572df8a7764c185

name: my-org
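Put together, the lookup and the bucket creation could be scripted roughly like this. The helper names and the mc alias name cincminio are hypothetical, and the endpoint and credentials are the example values from the MinIO configuration above.

```shell
# Extract the guid from `knife org show` output on stdin.
org_guid() {
  awk '$1 == "guid:" { print $2 }'
}

# Build the bucket name Cinc expects for an organization guid.
bucket_name() {
  echo "organization-$1"
}

# Usage (alias name "cincminio" is an assumption):
#   guid=$(knife org show my-org | org_guid)
#   mc alias set cincminio https://backend-1.example.com:9900 minioadmin "$pass"
#   mc mb "cincminio/$(bucket_name "$guid")"
```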

Another important thing to note: when the settings say that an S3 bucket replaces Bookshelf, requests follow the AWS bucket naming convention, where the bucket name is prepended to the host of the external URL. So the following 2 settings in cinc-server.rb below make requests go to cinc-minio-cluster.example.com:

bookshelf['external_url'] = 'https://example.com'

opscode_erchef['s3_bucket'] = 'cinc-minio-cluster'
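To double check which hostname your own pair of settings produces, the same rule can be reproduced in a few lines of shell. This is just an illustration of the naming convention described above; the function name is made up.

```shell
# Combine bucket name and external URL the way described above:
# the bucket becomes the leftmost label of the external URL's host.
s3_vhost() {
  local bucket="$1" url="$2" host
  host="${url#*://}"   # strip the scheme
  host="${host%%/*}"   # strip any path
  echo "$bucket.$host"
}

# Usage: confirm the resulting name resolves to your balancer address
#   getent hosts "$(s3_vhost cinc-minio-cluster https://example.com)"
```

Both cinc-clients and the frontend servers need to resolve that name, so it has to exist in DNS or in /etc/hosts.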

Balancing

Use L4/L7 balancing, and configure an L7 health check on haproxy like this:

frontend cinc-minio-cluster

mode http

bind 10.0.0.100:443 ssl crt /etc/ssl/haproxy

default_backend cinc-minio

backend cinc-minio

balance roundrobin

option httpchk GET /minio/health/live

http-check expect status 200

server backend-1 backend-1.example.com:9900 check-ssl check ssl verify none

server backend-2 backend-2.example.com:9900 check-ssl check ssl verify none

server backend-3 backend-3.example.com:9900 check-ssl check ssl verify none

server backend-4 backend-4.example.com:9900 check-ssl check ssl verify none

CINC frontend services

Installation and configuration

Run the omnitruck script to perform initial installation.

curl -L https://omnitruck.cinc.sh/install.sh | sudo bash -s -- -P cinc-server

For nginx we can either place the certificates we generated in /etc/cinc-project or let them be auto-generated during install.

Then, using the official docs, we will compose the config /etc/cinc-project/cinc-server.rb for our use case:

#PostgreSQL

postgresql['external'] = true

postgresql['vip'] = 'cinc-postgresql-cluster.example.com'

postgresql['db_superuser'] = '$user'

postgresql['db_superuser_password'] = '$pass'

#Opensearch

opensearch['external'] = true

opensearch['external_url'] = 'https://cinc-opensearch-cluster.example.com:9200'

opscode_erchef['search_auth_username'] = '$user'

opscode_erchef['search_auth_password'] = '$pass'

opscode_erchef['search_ssl_verify'] = false

#MinIO

bookshelf['enable'] = false

bookshelf['vip'] = 'cinc-minio-cluster.example.com'

bookshelf['external_url'] = 'https://example.com'

bookshelf['access_key_id'] = '$user'

bookshelf['secret_access_key'] = '$pass'

opscode_erchef['s3_bucket'] = 'cinc-minio-cluster'

#Nginx certificates in case you don't want to use auto-generated(optional)

nginx['ssl_certificate'] = '/etc/cinc-project/server.pem'

nginx['ssl_certificate_key'] = '/etc/cinc-project/server.key'

#Nginx optimizations

nginx['client_max_body_size'] = '500m'

nginx['worker_processes'] = 10

nginx['worker_connections'] = 10240

nginx['keepalive_requests'] = 200

nginx['keepalive_timeout'] = '60s'

Then run the command to deploy and configure local services and configure backend services:

cinc-server-ctl reconfigure

That will install local services, connect to opensearch and postgresql, create databases, users, indexes etc.

In case you use your own certificates make sure they are owned by user cinc after this step.

First frontend node has been added.

Add more frontend VMs

To bootstrap another frontend node, first run the omnitruck script on the new node. Once that is done, transfer the following files from the bootstrapped node to the same locations on the new node:

/etc/cinc-project/cinc-server.rb

/etc/cinc-project/dark_launch_features.json

/etc/cinc-project/pivotal.pem

/etc/cinc-project/pivotal.rb

/etc/cinc-project/private-chef.sh

/etc/cinc-project/private-cinc-secrets.json

/etc/cinc-project/webui_priv.pem

/etc/cinc-project/webui_pub.pem

/var/opt/cinc-project/bootstrapped

/var/opt/cinc-project/upgrades/migration-level
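One way to copy that list in a single step is rsync with a file list. This is a sketch that assumes rsync is installed on both nodes and that you have root SSH access from the bootstrapped node to the new one; the function names are my own.

```shell
# The files listed above, one per line.
frontend_files() {
  cat <<'EOF'
/etc/cinc-project/cinc-server.rb
/etc/cinc-project/dark_launch_features.json
/etc/cinc-project/pivotal.pem
/etc/cinc-project/pivotal.rb
/etc/cinc-project/private-chef.sh
/etc/cinc-project/private-cinc-secrets.json
/etc/cinc-project/webui_priv.pem
/etc/cinc-project/webui_pub.pem
/var/opt/cinc-project/bootstrapped
/var/opt/cinc-project/upgrades/migration-level
EOF
}

# Copy the files to host $1, preserving paths, ownership and modes.
# --files-from=- reads the list from stdin, relative to the source dir "/".
sync_frontend() {
  frontend_files | rsync -a --files-from=- / "root@$1:/"
}

# Usage (run on frontend-1): sync_frontend frontend-2.example.com
```

rsync creates missing parent directories such as /var/opt/cinc-project/upgrades on the target, which a plain scp of individual files would not do.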

Then run cinc-server-ctl reconfigure. Because the new node already has the credentials, it will see that the users are already created and skip creating them. More nodes can be added this way.

Create balancing for the nodes on haproxy.

Balancing

The balancing is also very simple to configure. You could use keepalived directly, or, if you want more logging or traffic manipulation features, haproxy makes this easy. I will be using it here as well.

frontend cinc-server-cluster

mode http

bind 10.0.0.101:443 ssl crt /etc/ssl/haproxy

default_backend cinc-server

backend cinc-server

balance roundrobin

server frontend-1 frontend-1.example.com:443 check ssl verify none

server frontend-2 frontend-2.example.com:443 check ssl verify none
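Before pointing clients at the balancer, it is worth probing each frontend directly. The sketch below assumes Cinc Server exposes the same /_status endpoint as upstream Chef Infra Server and that a healthy node reports "status":"pong"; verify this on your version. The function names are hypothetical.

```shell
# Extract the top-level "status" value from /_status JSON on stdin.
status_field() {
  grep -o '"status" *: *"[a-z]*"' | head -n 1 | sed 's/.*"\([a-z]*\)"$/\1/'
}

# Fetch the status document from one frontend; -k skips certificate checks.
fetch_status() {
  curl -sk --max-time 5 "https://$1/_status"
}

# Report each frontend as ok or FAILED; return non-zero if any failed.
check_frontends() {
  local host rc=0
  for host in "$@"; do
    if [ "$(fetch_status "$host" | status_field)" = "pong" ]; then
      echo "$host ok"
    else
      echo "$host FAILED"
      rc=1
    fi
  done
  return "$rc"
}

# Usage: check_frontends frontend-1.example.com frontend-2.example.com
```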

Conclusion

Your Cinc is now a highly available cluster. You are ready to create your organization or restore data from an existing server. Before uploading cookbooks, don’t forget to create the bucket in MinIO with the naming discussed above. Additionally, you can deploy Prometheus exporters and monitor the whole cluster with Prometheus/Grafana. Check out the references for additional information.